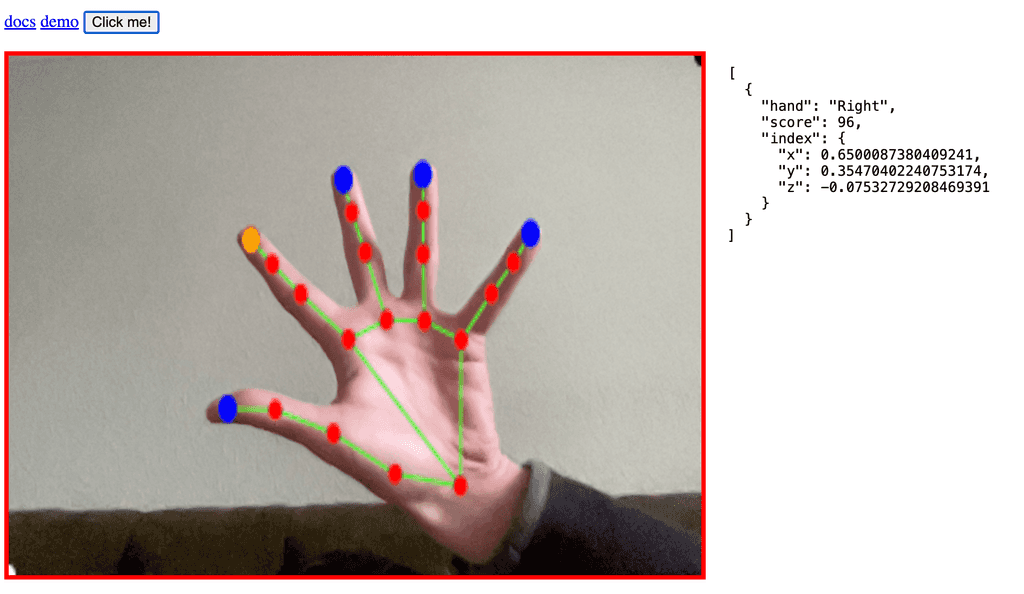

MediaPipe Hand Recognition

The awesome MediaPipe library has built-in hand detection that can run right inside the browser.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>hands</title>

<script src="https://cdn.jsdelivr.net/npm/@mediapipe/drawing_utils/drawing_utils.js" crossorigin="anonymous"></script>

<script src="https://cdn.jsdelivr.net/npm/@mediapipe/hands/hands.js" crossorigin="anonymous"></script>

<style>

.container {

position: relative;

border: 4px solid red;

}

.container,

#webcam,

#canvas {

display: block;

width: 640px;

aspect-ratio: 4/3;

}

#canvas {

position: absolute;

left: 0;

top: 0;

right: 0;

bottom: 0;

}

/* flip everything */

#webcam,

#canvas {

transform: rotateY(180deg);

}

</style>

</head>

<body>

<div style="display: flex; gap: 20px">

<div class="container">

<video id="webcam" autoplay playsinline></video>

<canvas id="canvas"></canvas>

</div>

<div>

<button id="btn" disabled>Start webcam</button>

<pre id="res"></pre>

</div>

</div>

</body>

<script type="module">

import { HandLandmarker, FilesetResolver } from "https://cdn.jsdelivr.net/npm/@mediapipe/tasks-vision@0.10.0";

const btn = document.getElementById("btn");

const video = document.getElementById("webcam");

const canvas = document.getElementById("canvas");

const ctx = canvas.getContext("2d");

// step 1: first we need to load the model

const vision = await FilesetResolver.forVisionTasks("https://cdn.jsdelivr.net/npm/@mediapipe/tasks-vision@0.10.0/wasm");

const handLandmarker = await HandLandmarker.createFromOptions(vision, {

baseOptions: {

modelAssetPath: `https://storage.googleapis.com/mediapipe-models/hand_landmarker/hand_landmarker/float16/1/hand_landmarker.task`,

delegate: "GPU",

},

runningMode: "VIDEO",

numHands: 2,

minHandDetectionConfidence: 0.9, // 0.5 default

});

// step 2: after the model is loaded, enable the button and attach a click listener

btn.disabled = false;

btn.addEventListener("click", async () => {

// step 3.1: once clicked, capture the webcam

video.srcObject = await navigator.mediaDevices.getUserMedia({ video: true });

// step 3.2: and attach a listener to the loadeddata event

video.addEventListener("loadeddata", recognizeHands, { once: true });

});

let lastVideoTime = -1;

let results = undefined;

// step 4: once we have video stream - recognize and render hands on canvas

async function recognizeHands() {

// step 4.1: recognize

let startTimeMs = performance.now();

if (lastVideoTime !== video.currentTime) {

lastVideoTime = video.currentTime;

results = handLandmarker.detectForVideo(video, startTimeMs);

}

// step 4.2: render

ctx.save();

ctx.clearRect(0, 0, canvas.width, canvas.height);

if (results.landmarks) {

for (const landmarks of results.landmarks) {

drawConnectors(ctx, landmarks, HAND_CONNECTIONS, { color: "#00ff00", lineWidth: 1 });

drawLandmarks(ctx, landmarks, { color: "#FF0000", radius: 1 });

// example: rendering dots at two corners of the frame (mirrored on screen by the CSS flip)

drawLandmarks(ctx, [{ x: 1, y: 1, z: 1 }], { color: "#FFFFFF", radius: 1 }); // left bottom corner

drawLandmarks(ctx, [{ x: 0, y: 0, z: 0 }], { color: "#000000", radius: 1 }); // top right corner

}

}

// example: rendering finger tips as blue circles

if (results.landmarks) {

const FINGER_TIP_INDICES = [4, 8, 12, 16, 20];

for (const landmarks of results.landmarks) {

const tips = FINGER_TIP_INDICES.map((i) => landmarks[i]).filter(Boolean);

drawLandmarks(ctx, tips, { color: "#0000FF", radius: 2 });

}

}

// example: logging finger tips

if (results.landmarks && results.handednesses) {

const out = [];

for (let h = 0; h < results.landmarks.length; h++) {

const handedness = results.handednesses[h]?.[0];

if (!handedness) continue;

// flip because of mirror

const hand = handedness.categoryName === "Left" ? "Right" : "Left";

out.push({

hand,

score: Math.round(handedness.score * 100),

// thumb: results.landmarks[h][4] ?? null,

index: results.landmarks[h][8] ?? null,

// middle: results.landmarks[h][12] ?? null,

// ring: results.landmarks[h][16] ?? null,

// pinky: results.landmarks[h][20] ?? null,

});

}

document.getElementById("res").textContent = JSON.stringify(out, null, 2);

}

// example: orange index fingers

if (results.landmarks) {

const indexFingersLandmarks = results.landmarks.map((landmarks) => landmarks[8]);

drawLandmarks(ctx, indexFingersLandmarks, { color: "orange", radius: 2 });

}

ctx.restore();

// step 4.3: call this function again to keep predicting when the browser is ready.

window.requestAnimationFrame(recognizeHands);

}

</script>

</html>
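
The landmarks printed into the `<pre>` are normalized to the 0..1 range relative to the video frame, while the page mirrors the video and canvas with CSS. As a minimal sketch, assuming the 640x480 display box from the stylesheet above, a small helper could convert a landmark into pixel coordinates as they appear on screen. The name `toDisplayPixels` and the sample landmark are hypothetical and not part of MediaPipe:

```javascript
// Hypothetical helper, not part of the MediaPipe API.
// Converts a normalized landmark ({ x, y } in the 0..1 range) into pixel
// coordinates of the element it is displayed in.
// `mirrored` accounts for the CSS `transform: rotateY(180deg)` flip,
// which swaps left and right on screen.
function toDisplayPixels(landmark, width, height, mirrored = true) {
  const x = mirrored ? 1 - landmark.x : landmark.x;
  return {
    x: x * width,
    y: landmark.y * height,
  };
}

// Example with a made-up landmark (not real model output):
const sample = { x: 0.25, y: 0.5, z: 0 };
const onScreen = toDisplayPixels(sample, 640, 480);
console.log(onScreen); // { x: 480, y: 240 }
```

Inside `recognizeHands`, the same call could be applied to `results.landmarks[h][8]` to get the on-screen position of an index fingertip, for example to position a DOM element over it.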